CentOS 7 is powered by version 3.10.0 of the Linux kernel, with advanced support for Linux Containers and with XFS, a high-performance 64-bit journaling file system, as the default file system. It is also the first version of CentOS to include the systemd management engine, the firewalld dynamic firewall system, and the GRUB2 boot loader. CentOS 7 supports 64-bit x86 machines. MySQL has been replaced by MariaDB.

Systemd

————

- Systemd is a system and service manager for Linux operating systems.

- Systemd uses the command ‘systemctl’ to manage services, replacing the service, chkconfig, runlevel and power management commands used in CentOS 6.x.

- Systemd is designed to be backwards compatible with the SysV init scripts used in CentOS 6.x.

- In CentOS 7, systemd replaces Upstart as the default init system.

rpm -qa | grep systemd

Systemd units

- Represented by unit configuration files in /etc/systemd/system

- Encapsulate information about system services, listening sockets, and saved system state snapshots.

Systemd file locations:

| Directory | Description |
| --- | --- |
| /usr/lib/systemd/system/ | Systemd unit files distributed with installed RPM packages. |
| /run/systemd/system/ | Systemd unit files created at run time. |
| /etc/systemd/system/ | Systemd unit files created and managed by the system administrator. |
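When the same unit name exists in more than one of these directories, systemd prefers /etc/systemd/system/ over /run/systemd/system/, and that over /usr/lib/systemd/system/. The sketch below walks the three directories in that order and prints the first match. The function name `find_unit_file` and the `UNIT_ROOT` variable are hypothetical, introduced only so the lookup can be tried against a scratch directory tree.

```shell
#!/bin/sh
# Print the unit file that would take precedence for a given unit name.
# Search order: administrator files, then runtime files, then packaged files.
# UNIT_ROOT is an illustrative prefix (empty on a real system).
find_unit_file() {
    unit=$1
    for dir in /etc/systemd/system /run/systemd/system /usr/lib/systemd/system; do
        if [ -e "${UNIT_ROOT:-}$dir/$unit" ]; then
            printf '%s\n' "$dir/$unit"
            return 0
        fi
    done
    # No unit file with that name in any of the three directories.
    return 1
}
```

On a CentOS 7 host, `find_unit_file httpd.service` would typically print the packaged file unless an administrator has placed an override in /etc/systemd/system/.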

How to use Systemctl?

#systemctl stop vsftpd.service

#systemctl stop vsftpd

1) Listing services

#systemctl list-units --type service

#systemctl list-unit-files --type service

2) Displaying service status:

#systemctl status httpd.service

#systemctl is-active httpd.service

#systemctl is-enabled httpd.service

3) Starting a service

#systemctl start mysqld.service

4) Stopping a service

#systemctl stop named.service

5) Restarting a service

#systemctl restart vsftpd.service

#systemctl try-restart named.service

#systemctl reload httpd.service

6) Enabling a service

#systemctl enable mysqld.service

#systemctl reenable httpd.service

7) Disabling a service

#systemctl disable vsftpd.service

8) Preventing service from being started manually or by another service

#systemctl mask vsftpd.service

#systemctl unmask vsftpd.service
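For readers coming from CentOS 6.x, the following sketch translates a few common `service` and `chkconfig` invocations into the matching systemctl command. It only prints the new command and executes nothing. The function name `sysv_to_systemctl` is hypothetical, and the mapping covers only the handful of actions listed in the code.

```shell
#!/bin/sh
# Translate "service NAME ACTION" or "chkconfig NAME on|off" into systemctl form.
# Arguments: tool name, service name, action. Output: the equivalent command line.
sysv_to_systemctl() {
    tool=$1
    name=$2
    action=$3
    case "$tool:$action" in
        service:start|service:stop|service:restart|service:reload|service:status)
            echo "systemctl $action $name.service" ;;
        service:condrestart)
            # condrestart restarts only if the service is already running
            echo "systemctl try-restart $name.service" ;;
        chkconfig:on)
            echo "systemctl enable $name.service" ;;
        chkconfig:off)
            echo "systemctl disable $name.service" ;;
        *)
            echo "no mapping in this sketch for: $tool $name $action" >&2
            return 1 ;;
    esac
}
```

For example, `sysv_to_systemctl chkconfig vsftpd on` prints `systemctl enable vsftpd.service`.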

Working with Systemd Targets

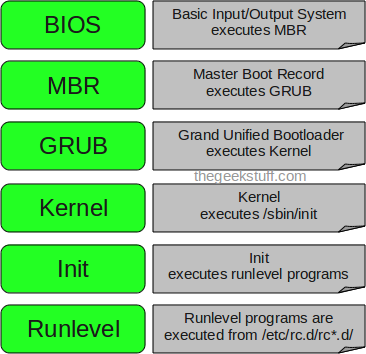

* Runlevels were numbered from 0 to 6 and were defined by a selection of system services to be run.

* In CentOS 7, the concept of runlevels has been replaced with systemd targets.
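As a rough guide, each old runlevel corresponds to a systemd target. The sketch below encodes the usual mapping; the function name `runlevel_to_target` is hypothetical and exists only for illustration.

```shell
#!/bin/sh
# Map a SysV runlevel number (0-6) to the comparable systemd target.
runlevel_to_target() {
    case "$1" in
        0)     echo poweroff.target ;;
        1)     echo rescue.target ;;
        2|3|4) echo multi-user.target ;;
        5)     echo graphical.target ;;
        6)     echo reboot.target ;;
        *)     echo "unknown runlevel: $1" >&2; return 1 ;;
    esac
}

# On a CentOS 7 host the default target can be inspected or changed with:
#   systemctl get-default
#   systemctl set-default "$(runlevel_to_target 3)"
# and the running system can be switched with:
#   systemctl isolate "$(runlevel_to_target 5)"
```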

FIREWALLD SUITE

The dynamic firewall daemon firewalld provides a dynamically managed firewall with support for network “zones” to assign a level of trust to a network and its associated connections and interfaces. It has support for IPv4 and IPv6 firewall settings. A graphical configuration tool, firewall-config, is used to configure firewalld.

The new suite in CentOS 7 is intended to replace the iptables service in the future. The concept of chains and rules is withdrawn and the concept of zones is introduced. Each interface is linked to a zone, whose properties can be changed either graphically or with the firewall-cmd command.

It is dynamic, so no full restart is required after a configuration change.

- The concept of chains is removed and the concept of zones is introduced.

- Each interface is linked to a zone. An interface can be linked to only one zone, but a zone can have more than one interface linked to it.

- There are several predefined zones in which an interface can be placed: drop, block, public, external, dmz, work, home, internal and trusted.

- A zone can be configured with a set of settings describing the behaviour of the zone.

The essential differences between firewalld and the iptables service are:

The iptables service stores configuration in /etc/sysconfig/iptables, while firewalld stores it in various XML files in /usr/lib/firewalld/ and /etc/firewalld/.

With the iptables service, every single change means flushing all the old rules and reading all the new rules from /etc/sysconfig/iptables while with firewalld there is no re-creating of all the rules; only the differences are applied. Consequently, firewalld can change the settings during runtime without existing connections being lost.

Understanding Network Zones

Firewalls can be used to separate networks into different zones based on the level of trust the user has decided to place on the devices and traffic within that network. NetworkManager informs firewalld to which zone an interface belongs. An interface’s assigned zone can be changed by NetworkManager or via the firewall-config tool which can open the relevant NetworkManager window for you.

The zones that can be configured in /etc/firewalld/ include:

drop

Any incoming network packets are dropped, there is no reply. Only outgoing network connections are possible.

block

Any incoming network connections are rejected with an icmp-host-prohibited message for IPv4 and icmp6-adm-prohibited for IPv6. Only network connections initiated from within the system are possible.

public

For use in public areas. You do not trust the other computers on the network to not harm your computer. Only selected incoming connections are accepted.

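external

For use on external networks with masquerading enabled, especially for routers. You do not trust the other computers on the network to not harm your computer. Only selected incoming connections are accepted.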

trusted

All network connections are accepted.

internal

For use on internal networks. You mostly trust the other computers on the networks to not harm your computer. Only selected incoming connections are accepted.

home

For use in home areas. You mostly trust the other computers on networks to not harm your computer. Only selected incoming connections are accepted.

It is possible to designate one of these zones to be the default zone. When interface connections are added to NetworkManager, they are assigned to the default zone. On installation, the default zone in firewalld is set to be the public zone.

Configuring the Firewall Using the Command Line Tool, firewall-cmd

- firewall-cmd --state >> displays the state of firewalld

- firewall-cmd --reload >> reloads firewalld

- firewall-cmd --get-zones >> shows all available zones

- firewall-cmd --get-services >> shows all supported services

- firewall-cmd --get-icmptypes >> shows all supported ICMP types

- firewall-cmd --list-all-zones >> lists all zones with their features

- firewall-cmd [--zone=<zone>] --list-all >> lists the features of a single zone

- firewall-cmd --get-default-zone >> shows the default zone

- firewall-cmd --set-default-zone=<zone> >> sets the default zone

- firewall-cmd --get-active-zones >> shows the zones that are active, that is, bound to an interface

- firewall-cmd --get-zone-of-interface=<interface> >> shows the zone of an interface

- firewall-cmd [--zone=<zone>] --add-interface=<interface> >> adds an interface to a zone (the default zone if none is given)

- firewall-cmd [--zone=<zone>] --change-interface=<interface> >> moves an interface to another zone

- firewall-cmd [--zone=<zone>] --remove-interface=<interface> >> removes an interface from a zone

- firewall-cmd [--zone=<zone>] --query-interface=<interface> >> checks whether an interface is in a zone

- firewall-cmd [--zone=<zone>] --list-services >> shows the services enabled in a zone

- firewall-cmd --panic-on | --panic-off >> enables/disables panic mode (blocks all traffic)
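A common task built from these commands is allowing a service in a zone so that the change survives a reload. The sketch below uses the `--permanent` and `--add-service` options of firewall-cmd, which are not in the list above, followed by `--reload`. The helper names `fw_run` and `fw_allow_service` and the `FW_DRY_RUN` variable are hypothetical; with `FW_DRY_RUN=1` the commands are only printed, which makes it possible to review them before touching a live firewall.

```shell
#!/bin/sh
# Run a command, or just print it when FW_DRY_RUN=1 (illustrative switch).
fw_run() {
    if [ "${FW_DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Permanently allow SERVICE in ZONE, then reload so the change becomes active.
# Usage: fw_allow_service <zone> <service>
fw_allow_service() {
    zone=$1
    service=$2
    fw_run firewall-cmd --permanent --zone="$zone" --add-service="$service" &&
    fw_run firewall-cmd --reload
}
```

For example, `FW_DRY_RUN=1; fw_allow_service public http` prints the two firewall-cmd lines without executing them; without the variable, it must be run as root on a host where firewalld is active.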

Disabling firewalld

#systemctl disable firewalld

#systemctl stop firewalld

Start firewalld

#systemctl start firewalld

Localectl Command

The system locale is set in the file /etc/locale.conf, but its settings are limited to the sort order, display language, time format and so on. Other important settings include the keyboard layout for consoles and, if the X Server is running, for the GUI. The command localectl can display and control many of these settings.

#localectl status >> to display locale settings

#localectl set-locale LANG=en_GB.utf8 >> to set the Language

#localectl list-locales >> to list locales

#localectl list-keymaps >> to list keyboard mappings

#localectl set-keymap uk >> to set the key map
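Since the locale itself lives in /etc/locale.conf as simple `NAME=value` lines, it can also be read from a script without calling localectl. The following is a minimal sketch, assuming values are either unquoted or wrapped in double quotes; the function name `locale_conf_get` is hypothetical.

```shell
#!/bin/sh
# Print the value of one variable (LANG by default) from a locale.conf style file.
# Usage: locale_conf_get [VARIABLE] [FILE]
locale_conf_get() {
    var=${1:-LANG}
    file=${2:-/etc/locale.conf}
    # Match VAR=value or VAR="value", strip the optional quotes,
    # and keep the last assignment if the variable appears more than once.
    sed -n 's/^'"$var"'="\{0,1\}\([^"]*\)"\{0,1\}$/\1/p' "$file" | tail -n 1
}
```

After `localectl set-locale LANG=en_GB.utf8`, calling `locale_conf_get LANG` should print the newly configured value.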

Timedatectl Command

In CentOS 7, a new command, “timedatectl”, is used to set the date and time. This command is distributed as part of the systemd system and service manager. You can use it to change the date and time, set the time zone, check the current date and time, and more.

To find list of all available time zones,

#timedatectl list-timezones

To set timezone

#timedatectl set-timezone time_zone

In this example, set timezone to America/Chicago

#timedatectl set-timezone America/Chicago

#timedatectl set-time YYYY-MM-DD >> To set date

#timedatectl set-time 2014-07-19

#timedatectl set-time HH:MM:SS >> To set time

#timedatectl set-time 15:12:00

#timedatectl set-ntp yes >> enable NTP synchronization
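`timedatectl set-time` also accepts the date and time together as a single "YYYY-MM-DD HH:MM:SS" argument, which avoids setting them in two steps. The sketch below only checks the shape of both parts and joins them; it does not verify that the values form a real calendar date. The function name `set_time_arg` is hypothetical.

```shell
#!/bin/sh
# Build the combined argument for "timedatectl set-time" from a date and a time.
# Usage: set_time_arg YYYY-MM-DD HH:MM:SS
set_time_arg() {
    d=$1
    t=$2
    case "$d" in
        [0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]) ;;
        *) echo "date must look like YYYY-MM-DD: $d" >&2; return 1 ;;
    esac
    case "$t" in
        [0-9][0-9]:[0-9][0-9]:[0-9][0-9]) ;;
        *) echo "time must look like HH:MM:SS: $t" >&2; return 1 ;;
    esac
    echo "$d $t"
}

# Usage on a CentOS 7 host (as root):
#   timedatectl set-time "$(set_time_arg 2014-07-19 15:12:00)"
```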

Hostnamectl command

In earlier CentOS releases and on other Linux systems, the host name was changed by editing /etc/sysconfig/network. In CentOS 7, editing that file has no effect, even after multiple reboots of the server.

The procedure to change the host name in CentOS 7 is now totally different from the previous versions.

In CentOS/RHEL 7, there is a command line utility called hostnamectl, which allows you to view or modify hostname related configurations.

CentOS 7 supports three classes of host names:

Static – The static host name is the traditional host name, which can be chosen by the user and is stored in the /etc/hostname file. It is the normal host name, usually an FQDN.

Transient – The “transient” hostname is a temporary hostname assigned at run time, for example, by a DHCP or mDNS server.

Pretty – The “pretty” hostname is a free-form hostname (special and whitespace characters are allowed) that is presented to end users.

#hostnamectl status

To view the static, transient or pretty hostname only, use the “--static”, “--transient” or “--pretty” option, respectively:

#hostnamectl status [--static|--transient|--pretty]

To change all three hostnames: static, transient, and pretty, simultaneously:

#hostnamectl set-hostname <host-name>

To set the hostname on a remote machine, use the -H option:

#hostnamectl -H username@hostname set-hostname <host-name>
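The same `--static` and `--pretty` options can be passed to `set-hostname` to change one class of hostname at a time. The sketch below sets a static and a pretty name separately. The helper names `hn_run` and `set_hostnames` and the `HN_DRY_RUN` variable are hypothetical; with `HN_DRY_RUN=1` the commands are only printed (without shell quoting, so the output is for reading rather than for pasting).

```shell
#!/bin/sh
# Run a command, or just print it when HN_DRY_RUN=1 (illustrative switch).
hn_run() {
    if [ "${HN_DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Set the static and the pretty hostname independently.
# Usage: set_hostnames <static-name> <pretty-name>
set_hostnames() {
    static=$1
    pretty=$2
    hn_run hostnamectl set-hostname "$static" --static &&
    hn_run hostnamectl set-hostname "$pretty" --pretty
}
```

For example, `set_hostnames web01.example.com "Web Server 01"` keeps a DNS-friendly static name while showing a readable name to users; both names here are placeholders.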

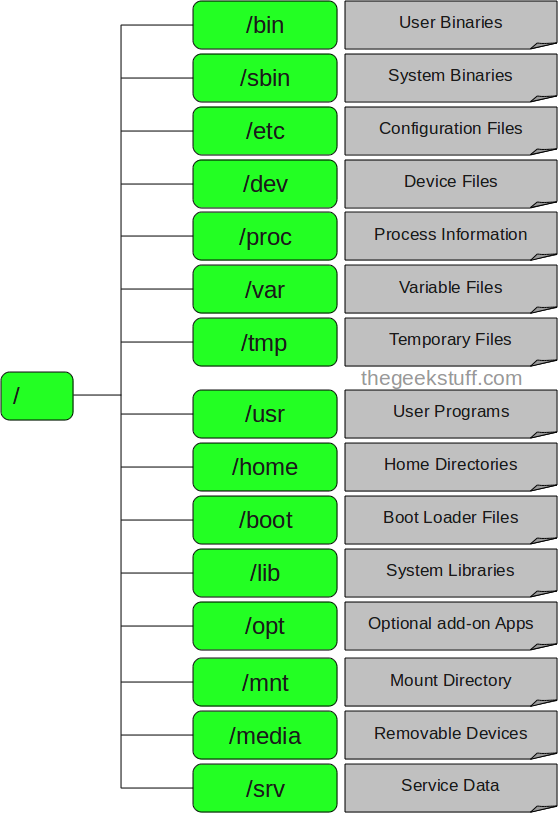

Root file system change

* The /bin, /sbin, /lib and /lib64 directories are now under the /usr directory.

* The /tmp directory can now be used as a temporary file storage system (tmpfs).

* The /run directory is now used as a temporary file storage system (tmpfs). Applications can now use /run the same way they use the /var/run directory.

/tmp and /run directory

- Offers the ability to use /tmp as a mount point for a temporary file storage system (tmpfs).

- When enabled, this temporary storage appears as a mounted file system, but stores its content in volatile memory instead of on a persistent storage device. No files in /tmp are stored on the hard drive except when memory is low, in which case swap space is used. This means that the contents of /tmp are not persisted across a reboot.

To enable or disable this mount:

# systemctl enable tmp.mount

# systemctl disable tmp.mount

- Files stored in /run and /run/lock are no longer persistent and do not survive a reboot.
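Whether /tmp or /run is actually backed by tmpfs on a given machine can be checked from the kernel's mount table. The sketch below reads /proc/mounts (or any file in the same format) and reports the file system type of a mount point. The function names `fs_type_of` and `is_tmpfs` are hypothetical.

```shell
#!/bin/sh
# Print the file system type mounted at a mount point.
# Usage: fs_type_of <mount-point> [mounts-file]   (default: /proc/mounts)
fs_type_of() {
    # Field 2 is the mount point, field 3 the type; the last match wins
    # because later mounts cover earlier ones on the same path.
    awk -v mp="$1" '
        $2 == mp { type = $3 }
        END { if (type != "") print type; else exit 1 }
    ' "${2:-/proc/mounts}"
}

# Succeed only if the mount point is a tmpfs mount.
is_tmpfs() {
    [ "$(fs_type_of "$1" "${2:-/proc/mounts}")" = tmpfs ]
}
```

For example, `is_tmpfs /tmp && echo "/tmp is in memory"` prints the message only after tmp.mount has been enabled and started.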

httpd package

- The configuration file contains fewer directives than in previous versions of httpd; the details are documented in the manuals installed in /usr/share/httpd.

- Uses a single binary and provides these Multi-Processing Modules (MPMs) as loadable modules: worker, prefork (default), and event. Edit the /etc/httpd/conf.modules.d/00-mpm.conf file to select which module is loaded.

- Content previously installed in /var/cache/mod_proxy has moved to /var/cache/httpd under either the proxy or the ssl subdirectory.

- Content previously installed in /var/www has moved to /usr/share/httpd, including icons, error and noindex (new).

- Modules built for previous versions are not supported.

- Configuration files that load modules are now placed in the /etc/httpd/conf.modules.d directory. Packages that provide additional loadable modules for httpd (like the php package) are added to this directory. Any configuration files in the conf.modules.d directory are processed before the main body of httpd.conf. Configuration files in the /etc/httpd/conf.d directory are now processed after the main body of httpd.conf.

- /etc/httpd/conf.d/autoindex.conf configures mod_autoindex directory indexing

- /etc/httpd/conf.d/userdir.conf configures access to us
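To see which Multi-Processing Module is currently selected, the uncommented `LoadModule mpm_..._module` line in 00-mpm.conf can be extracted. This is a minimal sketch, assuming the file follows the usual layout in which the unused MPM lines are commented out with `#`; the function name `active_mpm` is hypothetical.

```shell
#!/bin/sh
# Print the MPM name (prefork, worker or event) enabled in an MPM config file.
# Usage: active_mpm [FILE]   (default: /etc/httpd/conf.modules.d/00-mpm.conf)
active_mpm() {
    conf=${1:-/etc/httpd/conf.modules.d/00-mpm.conf}
    # Only lines that start with LoadModule (not "#LoadModule") are considered.
    sed -n 's/^[[:space:]]*LoadModule[[:space:]]\{1,\}mpm_\([a-z]*\)_module[[:space:]].*/\1/p' "$conf"
}
```

After editing the file to switch MPMs, `active_mpm` gives a quick check before restarting httpd with `systemctl restart httpd.service`.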

New Packages

Chrony

Provided as an alternative to the ntpd package. It is not fully compatible with all the features of ntpd; for that reason the ntpd package remains available, but it is deprecated.

#yum install -y chrony

The Chrony configuration is in the /etc/chrony.conf file.

Start the service

# systemctl enable chronyd

# systemctl start chronyd

HAProxy

It comes as an in-house load balancer for HTTP connections. The package distributes the load among the workers in the configured manner and returns the result to the requester, thus acting as a proxy that listens on a specific port. The balancing method, usually round robin, can be specified in the configuration file, along with the list of workers and the health checks. Each worker is tested against these checks for stability and is removed if a check fails.

Kernel Patch

kpatch and other utilities are introduced to provide dynamic kernel patching.

https://fedoraproject.org/wiki/FirewallD

https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Security_Guide/sec-Using_Firewalls.html